Testing packet loss in HTTP protocols

Compared to HTTP/1, HTTP/2 uses far fewer TCP connections: it multiplexes many HTTP requests over a single connection, so clients can fire multiple requests at once. This reduces TCP overhead (fewer handshakes and slow starts) and generally makes the protocol faster. It's common to send hundreds of requests over a single connection.

But what if a packet is lost? TCP delivers bytes in order, so if a single packet is dropped, everything behind it on that connection is stuck until the lost packet is retransmitted.

As you can imagine, a stall like this hurts far less when the connection carries a single request than when it carries hundreds. This is a well-known problem called TCP head-of-line blocking (HoL). As packet loss increases, HTTP/1 can outperform HTTP/2 because each lost packet in HTTP/2 blocks many more HTTP requests.
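A rough, back-of-the-envelope way to see why the shared connection hurts: assuming each packet is dropped independently with probability p (a simplification, since real loss is often bursty), the chance that at least one of n in-flight packets is lost is 1 - (1 - p)^n. The sketch below uses hypothetical numbers (1% loss, 1 versus 100 packets in flight), and the function name stallProbability is purely illustrative.

```javascript
// Probability that at least one of `packets` packets is dropped,
// assuming each packet is lost independently with probability `lossRate`.
// This is a simplified model, not a measurement.
function stallProbability(lossRate, packets) {
  return 1 - Math.pow(1 - lossRate, packets);
}

// Hypothetical numbers: 1% loss, one packet vs. 100 packets on the same connection.
console.log(stallProbability(0.01, 1).toFixed(3));   // 0.010
console.log(stallProbability(0.01, 100).toFixed(3)); // 0.634
```

Under this model, a connection carrying 100 packets' worth of multiplexed requests hits at least one retransmission stall roughly 63% of the time, and every request sharing that connection waits for it.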

HoL is something that can be tested in a very simple way. We need only:

- A Linux environment

- An HTTP/2 server

- A load testing tool

Set up packet loss

tc is a traffic-control utility used to configure the Linux packet scheduler.

Let's set the desired packet loss on the root queuing discipline (qdisc) of the loopback interface, lo.

tc qdisc add dev lo root netem loss ${loss}%

To remove the loss again, delete the qdisc:

tc qdisc del dev lo root netem

Optionally, isolate the test in a network namespace:

# create a namespace
ip netns add hol-test

# create a veth pair and set up the host interface
ip link add veth0 type veth peer name veth1
ip addr add 10.0.0.1/24 dev veth0
ip link set dev veth0 up

# set up the namespace interface
ip link set veth1 netns hol-test
ip -n hol-test addr add 10.0.0.2/24 dev veth1
ip -n hol-test link set dev veth1 up

# launch an HTTP server in the namespace
ip netns exec hol-test bash -c "./my-http-server"

When working in the namespace, do not forget to run tc inside it by prefixing the command: ip netns exec hol-test tc ...

Running the load test

The simplest approach is to use a load-testing tool such as k6. The test does not have to be complicated, but the tool must support HTTP/2.

// script.js
import http from 'k6/http';

export const options = {
  tlsAuth: [
    {
      domains: ['example.com'],
      cert: open('../cert.pem'),
      key: open('../cert-key.pem'),
    },
  ],
};

export default function () {
  const res = http.get('https://10.0.0.2:8000');
}

If your server supports both protocols, you can toggle the protocol used by k6's HTTP client with the GODEBUG environment variable:

GODEBUG=http2client=0 k6 run --vus 1000 --duration 60s --summary-export="http1_summary.json" script.js

GODEBUG=http2client=1 k6 run --vus 1000 --duration 60s --summary-export="http2_summary.json" script.js

I load-tested each packet-loss level separately, once per protocol:

for ((i = 0; i <= 10; i++)); do
  echo "Testing ${i}% packet loss"
  sudo ip netns exec hol-test tc qdisc add dev veth1 root netem loss ${i}%
  GODEBUG=http2client=0 k6 run --vus 10 --duration 60s --summary-export="${i}-loss_http1.1_summary.json" script.js
  GODEBUG=http2client=1 k6 run --vus 10 --duration 60s --summary-export="${i}-loss_http2_summary.json" script.js
  sudo ip netns exec hol-test tc qdisc del dev veth1 root netem
done

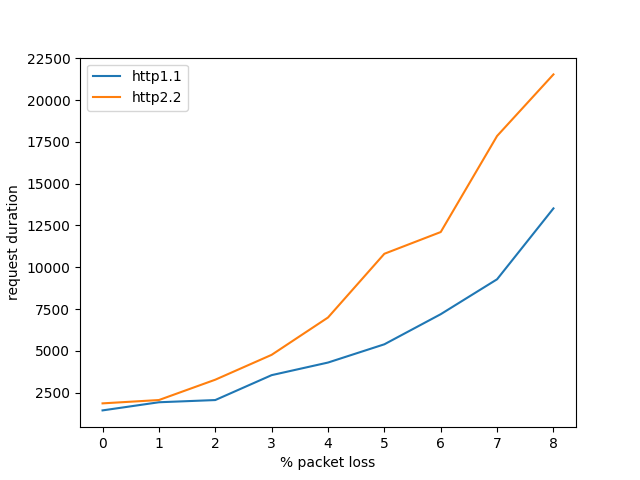

And plotted the summaries.
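One way to get the exported summaries into a plottable shape is to flatten them into a CSV. The sketch below is only an illustration: it assumes the file names produced by the loop above, and it assumes the exported JSON keeps its values directly under metrics.http_req_duration and metrics.http_reqs (verify this against the output of your k6 version). The helper names summaryToRow and buildCsv, and the choice of columns, are hypothetical.

```javascript
// Assumed shape of a --summary-export file (check your own output):
// { "metrics": { "http_req_duration": { "avg": ..., "p(95)": ... },
//                "http_reqs": { "rate": ... } } }

// Turn one parsed summary into a CSV row.
function summaryToRow(loss, protocol, summary) {
  const duration = summary.metrics.http_req_duration;
  const reqs = summary.metrics.http_reqs;
  return [loss, protocol, duration.avg, duration['p(95)'], reqs.rate].join(',');
}

// Build the whole CSV. `readSummary` is injected so the function stays
// independent of how files are read; it receives a file name and must
// return the parsed JSON object.
function buildCsv(readSummary, maxLoss = 10) {
  const rows = ['loss_percent,protocol,avg_ms,p95_ms,req_per_s'];
  for (let loss = 0; loss <= maxLoss; loss++) {
    for (const protocol of ['http1.1', 'http2']) {
      const file = `${loss}-loss_${protocol}_summary.json`;
      rows.push(summaryToRow(loss, protocol, readSummary(file)));
    }
  }
  return rows.join('\n');
}
```

In Node.js, readSummary could be a one-liner that reads the file with fs.readFileSync and passes the contents to JSON.parse. The resulting CSV can then go into any plotting tool.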

Result

As expected, we can see HTTP/1 starts to outperform HTTP/2 even when packet loss is very low.

You might see different results depending on your environment, but overall, the busier the connection, the worse it performs as packet loss increases.

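HTTP/3 addresses this problem by running over QUIC instead of TCP: QUIC handles loss per stream, so a lost packet only blocks the streams whose data it carried.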